To understand behavior fully, we need to understand how individuals adapt their responses to their environment. In many animal species, vision is crucial for acquiring and processing external information. Spatial information is directly available to individuals through the light illuminating the environment. The eye acts as an optical device that forms an image on the retina, and the visual field is the portion of the environment visible to the observer's eye. All visual information is therefore contained in the spherical projection of the visual field at the position of the eyes. Integrating this information allows animals to move appropriately in their environment.
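As a rough illustration of this spherical projection, the sketch below computes where a spherical object falls in an observer's visual field and how large it appears. The function name `project_sphere`, the coordinate convention (x forward, y left, z up), and the choice of a sphere as the object are assumptions made for illustration only; they are not taken from the study itself.

```python
import math


def project_sphere(observer, center, radius):
    """Project a sphere onto the visual sphere of a point observer.

    Hypothetical helper: returns (azimuth, elevation, angular_diameter),
    all in radians, for a sphere of the given radius centered at `center`
    and seen from `observer`. Positions are (x, y, z) tuples.
    """
    dx = center[0] - observer[0]
    dy = center[1] - observer[1]
    dz = center[2] - observer[2]
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    if distance <= radius:
        raise ValueError("observer is inside or touching the object")

    # Direction of the object's center on the visual sphere.
    azimuth = math.atan2(dy, dx)
    elevation = math.asin(dz / distance)

    # Angle subtended by the sphere: the tangent cone has half-angle
    # asin(radius / distance).
    angular_diameter = 2.0 * math.asin(radius / distance)
    return azimuth, elevation, angular_diameter
```

Under these assumptions, the same object subtends a smaller angle as it moves away, so its physical size and its distance are not separately available from a single projection.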

Because vision is geometric in nature, i.e. a projection of the environment, it is a good starting point for exploring the relationship between sensory information and emergent behavior. How does an individual integrate the information contained in the projection of its visual field to control its movement? Can we extract the behavioral constraints associated with different levels of complexity of the visual environment?

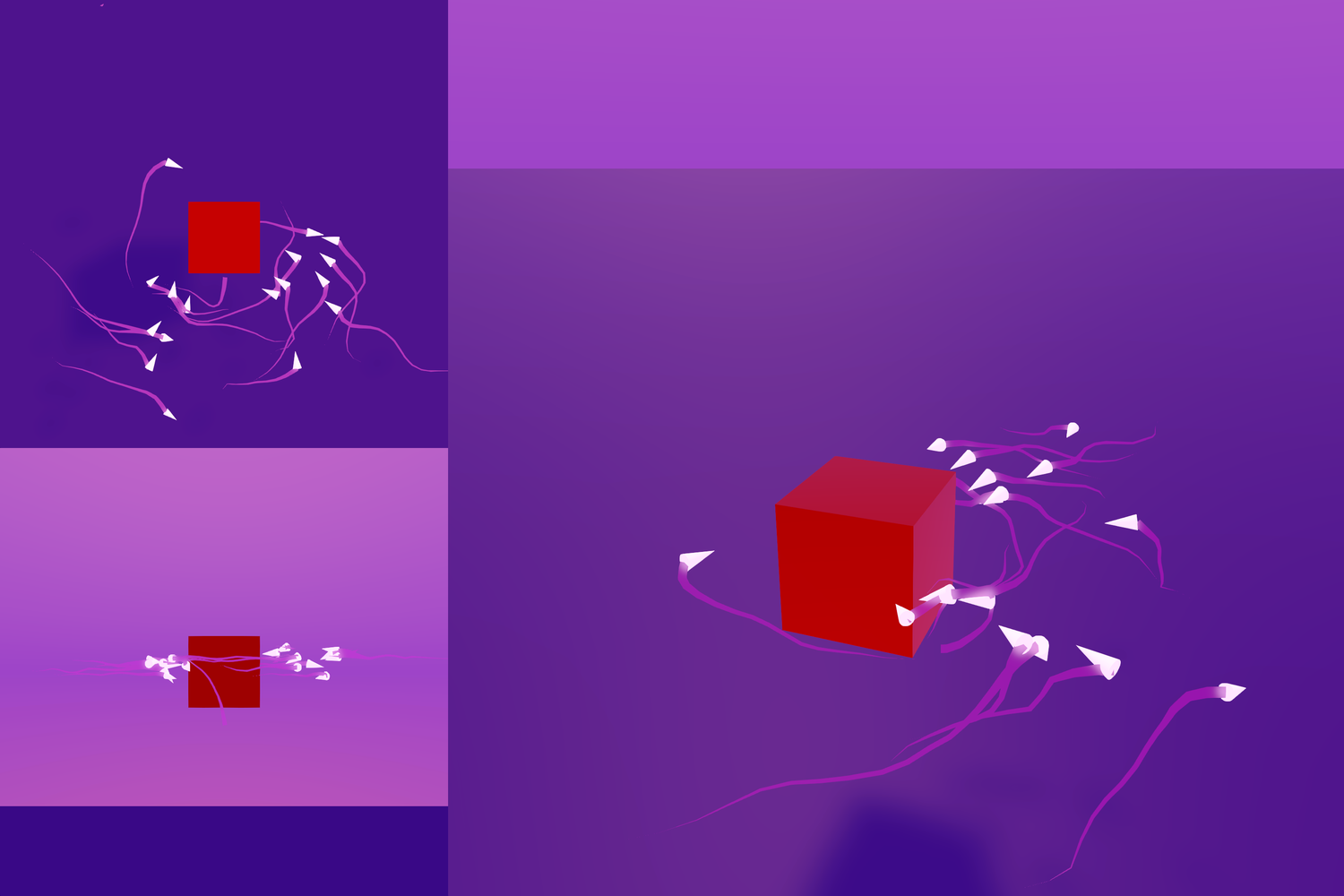

The proposed study will focus on the parallel development of an integrated VR system and a theoretical framework for the systematic analysis of interactions with virtual objects. The idea behind virtual reality (VR) is to immerse individuals in a digitally recreated artificial environment, which makes it possible to control and replace the information these individuals perceive in their visual field. VR is therefore an ideal tool for controlling the perceptual environment of individuals while studying their behavior. A video game engine provides dynamic, interactive control of a scene composed of virtual objects and thus produces the illusion of 3D objects in space.

Behavioral maps can be constructed continuously and exhaustively as a function of the size of the objects presented, their shapes, colors, speeds, and interactive responses, as well as their number. One advantage of VR-controlled stimuli is the ability to vary specific parameters continuously. For example, a virtual object can be projected at a range of sizes and the response recorded for each size. By continuously testing several parameters (motion, delay, and appearance), we can build a map of the interactions between an individual and a virtual object. Some of these interactions are continuous, while others may exhibit discontinuities, potentially highlighting different forms of behavioral reaction. The kinematics of an individual's interaction with an object span a large but finite range of parameters, so a systematic exploration of these parameters is possible. Building on my work on vision-based models of movement, it will then be possible to unravel the underlying representations used by an individual.
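The systematic exploration described above can be sketched as a sweep over a grid of stimulus parameters, followed by a search for abrupt changes in the recorded response. This is a minimal sketch, not the study's protocol: the names `sweep` and `find_jumps`, the `respond` callback (standing in for whatever measurement an experiment would return), and the jump threshold are all hypothetical.

```python
from itertools import product


def sweep(parameter_ranges, respond):
    """Evaluate a response on the full Cartesian grid of parameters.

    parameter_ranges: dict mapping a parameter name to a list of values.
    respond: hypothetical callable taking the parameters as keyword
             arguments and returning one number (the measured response).
    Returns (names, behavior_map), where behavior_map maps each tuple of
    parameter values (ordered as in names) to its response.
    """
    names = list(parameter_ranges)
    behavior_map = {}
    for values in product(*(parameter_ranges[name] for name in names)):
        params = dict(zip(names, values))
        behavior_map[values] = respond(**params)
    return names, behavior_map


def find_jumps(values, responses, threshold):
    """Return adjacent pairs of parameter values between which the
    response changes by more than `threshold` (an assumed criterion
    for a discontinuity)."""
    return [
        (values[i], values[i + 1])
        for i in range(len(values) - 1)
        if abs(responses[i + 1] - responses[i]) > threshold
    ]
```

In use, `respond` would wrap a trial in the VR setup, and `find_jumps` would be applied along one parameter axis at a time, with the other parameters held fixed, to locate candidate transitions between behavioral regimes.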

References:

Stowers, J. R., Hofbauer, M., Bastien, R., Griessner, J., Higgins, P., Farooqui, S., ... & Straw, A. D. (2017). Virtual reality for freely moving animals. Nature Methods, 14(10), 995-1002.

Bastien, R., & Romanczuk, P. (2020). A model of collective behavior based purely on vision. Science Advances, 6(6), eaay0792.

Naik, H., Bastien, R., Navab, N., & Couzin, I. (2019). Animals in virtual environments. arXiv preprint arXiv:1912.12763.

AAP TMBI 2022